How to deploy Kubernetes vClusters inside your namespaces.

A couple of days ago I stumbled upon the DigitalOcean Kubernetes Challenge. It sounds like a great idea. I love how DO is trying to open up more opportunities for the tech community to play with and learn Kubernetes through these hands-on challenges.

So I thought I should do my share of helping people, and I decided to pick one of their expert-level Kubernetes challenges, solve it, and document it. I also thought I’d take it one step further and implement everything as Infrastructure as Code (IaC) using Terraform.

In this blog post, I’ll explain the idea behind Kubernetes vClusters, how to get started with them, and how to provision your first Kubernetes cluster in DigitalOcean. So let’s get started.

Provision your first Kubernetes Cluster in DigitalOcean

We’ll be using Terraform for this task. Terraform has a provider for DigitalOcean. You can find more info here. The Kubernetes-specific part can be found here.

So let’s start with the configuration that provisions the Kubernetes cluster.

Create a new main.tf file containing:

# Deploy the actual Kubernetes cluster
resource "digitalocean_kubernetes_cluster" "kubernetes_cluster" {
  name    = "terraform-do-cluster"
  region  = "ams3"
  version = "1.19.15-do.0"
  tags    = ["my-tag"]

  # This default node pool is mandatory
  node_pool {
    name       = "default-pool"
    size       = "s-2vcpu-4gb"
    auto_scale = false
    node_count = 2
    tags       = ["node-pool-tag"]
  }
}

Then create a new file, versions.tf, and add the DigitalOcean Terraform provider to it:

# Configure the DigitalOcean Provider
terraform {
  required_providers {
    digitalocean = {
      source  = "digitalocean/digitalocean"
      version = "~> 2.0"
    }
  }
}

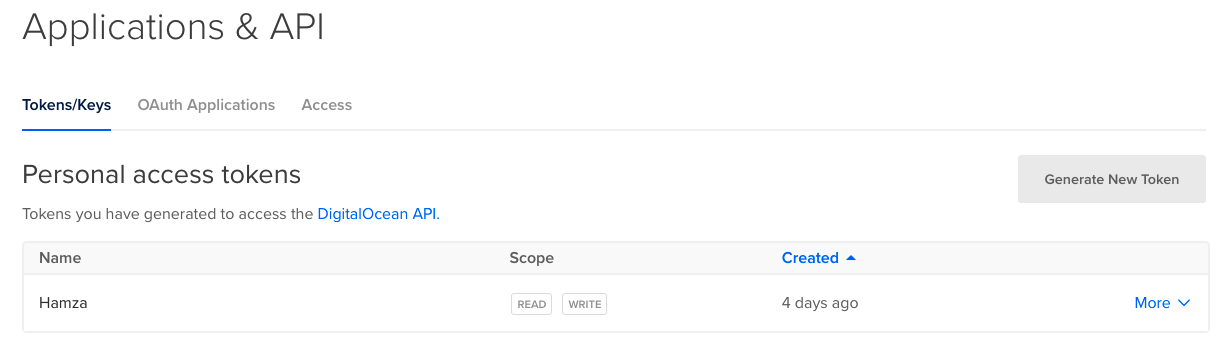

Before we run this configuration, we need to create a new personal access token. Log in to your DigitalOcean account and head to the API section. Then click the “Generate New Token” button and generate a token with read and write scope.

Create DigitalOcean Token

Then we need to set up the DigitalOcean Terraform provider, which is very simple: you only need to give it the personal access token you just created. You can either use a Terraform variable and enter the token at the prompt (don’t commit your token!) or use environment variables. The provider automatically picks up DIGITALOCEAN_TOKEN or DIGITALOCEAN_ACCESS_TOKEN if either is set.

So let’s create an environment variable named DIGITALOCEAN_TOKEN:

export DIGITALOCEAN_TOKEN=<Personal Token>
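
Since every later step depends on that token, it can be handy to check for it before calling Terraform. The snippet below is only a sketch: do_token_present is a helper name I made up, and it relies on nothing more than the two environment variable names mentioned above.

```shell
# Sketch: check that a DigitalOcean token is exported before running Terraform.
# do_token_present is a hypothetical helper, not part of Terraform or any DO tool.
do_token_present() {
  # The provider reads either variable, so one non-empty value is enough.
  [ -n "${DIGITALOCEAN_TOKEN:-}" ] || [ -n "${DIGITALOCEAN_ACCESS_TOKEN:-}" ]
}

if do_token_present; then
  echo "DigitalOcean token found in the environment"
else
  echo "No DigitalOcean token set; export DIGITALOCEAN_TOKEN first" >&2
fi
```

The check never prints the token itself, so it is safe to keep in a setup script.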

Now let’s create the Kubernetes cluster.

First, run terraform init to download the required providers:

$ terraform init

Initializing the backend...

Initializing provider plugins...
- Reusing previous version of digitalocean/digitalocean from the dependency lock file
- Installing digitalocean/digitalocean v2.16.0...
- Installed digitalocean/digitalocean v2.16.0 (signed by a HashiCorp partner, key ID F82037E524B9C0E8)

Partner and community providers are signed by their developers.
If you'd like to know more about provider signing, you can read about it here:
https://www.terraform.io/docs/cli/plugins/signing.html

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.

Next, run terraform plan to verify what resources are planned to be created:

$ terraform plan

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # digitalocean_kubernetes_cluster.kubernetes_cluster will be created
  + resource "digitalocean_kubernetes_cluster" "kubernetes_cluster" {
      + cluster_subnet = (known after apply)
      + created_at     = (known after apply)
      + endpoint       = (known after apply)
      + ha             = false
      + id             = (known after apply)
      + ipv4_address   = (known after apply)
      + kube_config    = (sensitive value)
      + name           = "terraform-do-cluster"
      + region         = "ams3"
      + service_subnet = (known after apply)
      + status         = (known after apply)
      + surge_upgrade  = true
      + tags           = [
          + "my-tag",
        ]
      + updated_at     = (known after apply)
      + urn            = (known after apply)
      + version        = "1.19.15-do.0"
      + vpc_uuid       = (known after apply)

      + maintenance_policy {
          + day        = (known after apply)
          + duration   = (known after apply)
          + start_time = (known after apply)
        }

      + node_pool {
          + actual_node_count = (known after apply)
          + auto_scale        = false
          + id                = (known after apply)
          + name              = "default-pool"
          + node_count        = 2
          + nodes             = (known after apply)
          + size              = "s-1vcpu-2gb"
          + tags              = [
              + "node-pool-tag",
            ]
        }
    }

Plan: 1 to add, 0 to change, 0 to destroy.

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run "terraform apply" now.

And finally, let’s apply the configuration with terraform apply.

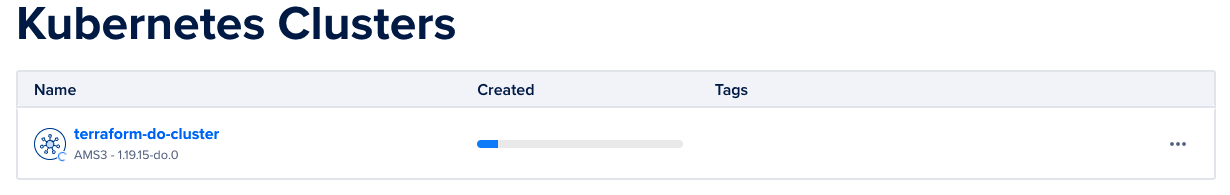

While the cluster is being provisioned, you will see the progress bar advance until it completes.
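
The note at the end of the plan output hints at a slightly safer workflow: save the plan with -out and then apply exactly that file. Here is a rough sketch of how that could look. The function name plan_then_apply and the file name tfplan are my own choices, not something Terraform prescribes.

```shell
# Sketch: save the plan to a file, then apply exactly that plan.
# plan_then_apply and the default file name "tfplan" are illustrative choices.
plan_then_apply() {
  plan_file="${1:-tfplan}"
  # -out writes the execution plan to disk so the apply step uses the same plan.
  terraform plan -out="$plan_file" || return 1
  # Applying a saved plan file does not ask for interactive approval.
  terraform apply "$plan_file"
}

# Usage (run inside the directory that holds main.tf and versions.tf):
#   plan_then_apply tfplan
```

Because a saved plan is applied without a confirmation prompt, review the plan output before calling the second step.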

Connect to the Kubernetes cluster

Now let’s connect to our Kubernetes cluster. To achieve that, we need to download the kubeconfig file. We will use curl to do this.

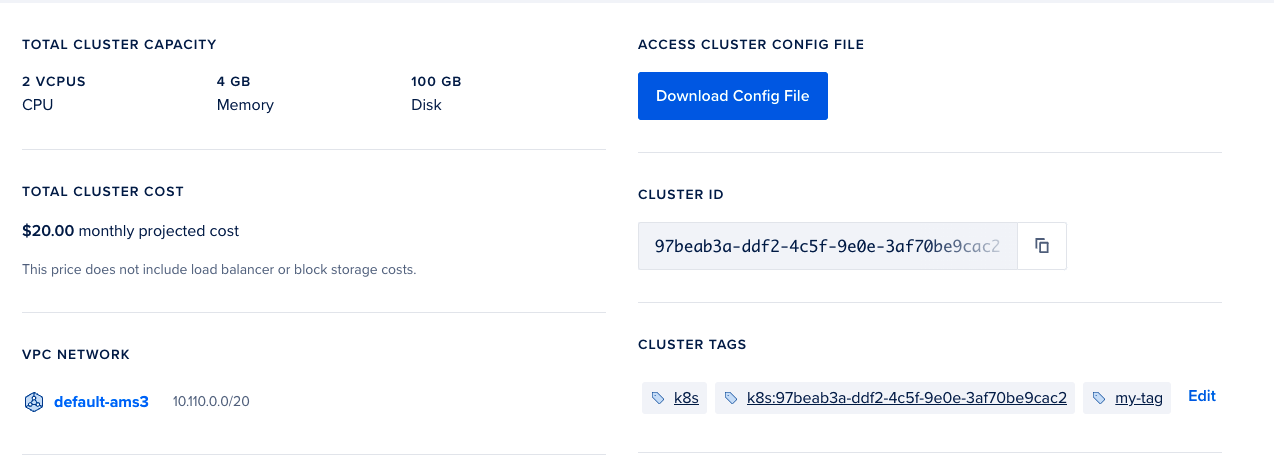

First, let’s create an environment variable with our cluster ID.

$ export CLUSTER_ID=<Cluster ID>

Note: You get the Cluster ID from your Kubernetes cluster dashboard.

Next, let’s go ahead and download the kubeconfig file:

$ curl -X GET -H "Content-Type: application/json" -H "Authorization: Bearer $DIGITALOCEAN_TOKEN" "https://api.digitalocean.com/v2/kubernetes/clusters/$CLUSTER_ID/kubeconfig" > config
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100  2080    0  2080    0     0   3537      0 --:--:-- --:--:-- --:--:--  3531

This will download a config file in your current directory.
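
One caveat with the plain redirect above: if the request fails, the API error body still ends up in the config file. A slightly more defensive variant could look like the sketch below. It uses the same endpoint as the command above; kubeconfig_url and fetch_kubeconfig are helper names I invented for this example.

```shell
# Sketch: download the kubeconfig defensively.
# kubeconfig_url and fetch_kubeconfig are hypothetical helper names.
kubeconfig_url() {
  # $1 is the cluster ID copied from the DigitalOcean dashboard.
  printf 'https://api.digitalocean.com/v2/kubernetes/clusters/%s/kubeconfig\n' "$1"
}

fetch_kubeconfig() {
  # $1 = cluster ID, $2 = output file (defaults to ./config)
  out_file="${2:-config}"
  (
    # The file holds cluster credentials, so create it with owner-only permissions.
    umask 077
    # --fail makes curl exit non-zero on HTTP errors instead of saving the
    # error body as if it were a kubeconfig.
    curl --fail --silent --show-error \
      -H "Authorization: Bearer $DIGITALOCEAN_TOKEN" \
      -o "$out_file" \
      "$(kubeconfig_url "$1")"
  )
}

# Usage:
#   fetch_kubeconfig "$CLUSTER_ID" ./config && export KUBECONFIG=./config
```

The umask only affects newly created files, so an existing config file keeps whatever permissions it already had.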

Let’s now see if everything works as expected:

$ export KUBECONFIG=./config
$ kubectl get pod -A
NAMESPACE     NAME                               READY   STATUS    RESTARTS   AGE
kube-system   cilium-8bx4g                       1/1     Running   0          19m
kube-system   cilium-kvrhq                       1/1     Running   0          19m
kube-system   cilium-operator-7fd9d7b9dc-7mb6v   1/1     Running   0          22m
kube-system   coredns-57877dc48d-4nh5j           1/1     Running   0          22m
kube-system   coredns-57877dc48d-5g6r8           1/1     Running   0          22m
kube-system   csi-do-node-7qpwp                  2/2     Running   0          19m
kube-system   csi-do-node-l58dn                  2/2     Running   0          19m
kube-system   do-node-agent-6w975                1/1     Running   0          19m
kube-system   do-node-agent-lvvbq                1/1     Running   0          19m
kube-system   kube-proxy-85mtl                   1/1     Running   0          19m
kube-system   kube-proxy-8zgpd                   1/1     Running   0          19m

Hooray! We built our first Kubernetes cluster and it’s working. Now we need to provision our vClusters, but before we do that, let’s check the current Kubernetes version:

$ kubectl version
Client Version: version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.1", GitCommit:"632ed300f2c34f6d6d15ca4cef3d3c7073412212", GitTreeState:"clean", BuildDate:"2021-08-19T15:38:26Z", GoVersion:"go1.16.6", Compiler:"gc", Platform:"darwin/arm64"}
Server Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.15", GitCommit:"58178e7f7aab455bc8de88d3bdd314b64141e7ee", GitTreeState:"clean", BuildDate:"2021-09-15T19:18:00Z", GoVersion:"go1.15.15", Compiler:"gc", Platform:"linux/amd64"}
WARNING: version difference between client (1.22) and server (1.19) exceeds the supported minor version skew of +/-1

From the Server Version above, we can see that the cluster is running Kubernetes 1.19.
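
The warning in that output is about version skew: kubectl is only supported within one minor version of the server. If you want to check this in a script, here is a small sketch that compares two GitVersion strings by hand. The helpers minor_of and skew_ok are hypothetical names, not kubectl features.

```shell
# Sketch: compare the minor versions reported by `kubectl version`.
# minor_of and skew_ok are hypothetical helpers, not kubectl features.
minor_of() {
  # "v1.22.1" or "v1.22.4+k3s1" -> "22"
  v="${1#v}"                 # drop the leading "v"
  v="${v#*.}"                # drop the major version and its dot
  printf '%s\n' "${v%%.*}"   # keep everything up to the next dot
}

skew_ok() {
  # Succeeds when the two versions are at most one minor version apart.
  diff=$(( $(minor_of "$1") - $(minor_of "$2") ))
  [ "$diff" -ge -1 ] && [ "$diff" -le 1 ]
}

# Client and server versions taken from the output above.
if skew_ok v1.22.1 v1.19.15; then
  echo "client and server are within one minor version"
else
  echo "version skew exceeds +/-1"
fi
# prints: version skew exceeds +/-1
```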

What are Virtual Kubernetes Clusters?

Virtual clusters are fully working Kubernetes clusters that run on top of other Kubernetes clusters. Compared to fully separate “real” clusters, virtual clusters do not have their own node pools. Instead, they schedule workloads inside the underlying cluster while having their own separate control plane.

Now imagine this scenario: you provisioned your actual Kubernetes cluster a while back with version 1.19.15-do.0 and you want to upgrade it to the latest version. But before you make the jump, you decide to test your services on the newer Kubernetes version first to make sure they work as expected. What will you do?

Well… You have two options:

- Create a new Kubernetes cluster in DO with the latest version and test your services there.

- Create a new virtual Kubernetes cluster inside your current cluster and test your services inside it.

In this article, we’ll choose the second option, mainly because it’s the cheaper one and, obviously, because we want to play with these shiny vClusters :)

vcluster CLI

Now the first thing we need to do is install the vcluster CLI. The installation instructions depend on the OS you’re using; the vcluster documentation explains them in depth.

After that, it’s really easy to create a new vCluster. Just run this command:

$ vcluster create vcluster-1 -n host-namespace-1 --k3s-image rancher/k3s:v1.22.4-k3s1

This creates a vcluster in the namespace host-namespace-1 that can be accessed through port forwarding.

Now that we deployed a vcluster, let’s connect to it. The easiest option to connect to your vcluster is by starting port-forwarding to the vcluster’s service via:

$ vcluster connect vcluster-1 -n host-namespace-1

Note: If you terminate the vcluster connect command, port-forwarding will be terminated, so keep this command open while you are working with your vcluster.

Now you’ll get a kubeconfig.yaml file in your current directory. You can use this file to connect to your vCluster.
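
If you would rather not dedicate a terminal to the port-forward, one option is to start vcluster connect in the background and wait for the kubeconfig file to show up. The helper below is a sketch under that assumption. wait_for_file is a name I made up, and the 30-second default is arbitrary.

```shell
# Sketch: wait until a non-empty file shows up, giving up after N seconds.
# wait_for_file is a hypothetical helper; the 30-second default is arbitrary.
wait_for_file() {
  path="$1"
  remaining="${2:-30}"
  while [ ! -s "$path" ]; do
    [ "$remaining" -gt 0 ] || return 1
    sleep 1
    remaining=$((remaining - 1))
  done
}

# Usage:
#   vcluster connect vcluster-1 -n host-namespace-1 &
#   connect_pid=$!
#   trap 'kill "$connect_pid" 2>/dev/null' EXIT   # stop port-forwarding on exit
#   wait_for_file ./kubeconfig.yaml 30 && export KUBECONFIG=./kubeconfig.yaml
```

The trap stops the background port-forward when the shell or script exits, which is the same effect as terminating the foreground command by hand.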

First export the new kubeconfig.yaml

$ export KUBECONFIG=./kubeconfig.yaml

Then let’s list the pods

$ kubectl get pod -A
NAMESPACE     NAME                      READY   STATUS    RESTARTS   AGE
kube-system   coredns-85cb69466-926hq   1/1     Running   0          4m45s

From the above output, we can conclude that we are now using the vCluster. Finally!

As you can see, there’s only one pod, coredns, which is the DNS service that Kubernetes uses.

Now let’s check the version:

$ kubectl version
Client Version: version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.1", GitCommit:"632ed300f2c34f6d6d15ca4cef3d3c7073412212", GitTreeState:"clean", BuildDate:"2021-08-19T15:38:26Z", GoVersion:"go1.16.6", Compiler:"gc", Platform:"darwin/arm64"}
Server Version: version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.4+k3s1", GitCommit:"bec170bc813e342cdba9710a1a71f8644c948770", GitTreeState:"clean", BuildDate:"2021-11-29T17:11:06Z", GoVersion:"go1.16.10", Compiler:"gc", Platform:"linux/amd64"}

Wow, it’s Kubernetes version 1.22! If you remember, our original cluster was running version 1.19. So now you can test your application in this virtual cluster and make sure that it works on a newer Kubernetes version. That should help ease your mind when you upgrade your main cluster!
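
To make the "test your application" part a bit more concrete, here is a sketch of a throwaway smoke test against the vcluster. The function name smoke_test, the demo namespace and the nginx image are placeholders I picked for illustration; swap in your own manifests.

```shell
# Sketch: deploy a throwaway workload into the vcluster as a smoke test.
# smoke_test, the "demo" namespace and the nginx image are illustrative choices.
smoke_test() {
  kubeconfig="${1:-./kubeconfig.yaml}"
  kubectl --kubeconfig "$kubeconfig" create namespace demo || return 1
  kubectl --kubeconfig "$kubeconfig" -n demo create deployment web --image=nginx || return 1
  # Block until the deployment reports that its rollout is complete.
  kubectl --kubeconfig "$kubeconfig" -n demo rollout status deployment/web --timeout=120s
}

# Usage (with `vcluster connect` still running in another terminal):
#   smoke_test ./kubeconfig.yaml
```

Since virtual clusters schedule their workloads inside the underlying cluster, running kubectl --kubeconfig ./config get pods -n host-namespace-1 against the host cluster afterwards should show the pod that backs this deployment.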